Introducing Manta: Scalable, Modular AI Models for Roleplay and Beyond

As developers and creators push the boundaries of AI-driven storytelling, one challenge remains constant: how to balance speed, depth, and cost across diverse workloads. Some sessions need lightning-fast replies; others demand long-context reasoning and emotional nuance.

That’s why we built Manta — a modular, composite AI model family designed to give product teams a clean, OpenAI-compatible interface while dynamically scaling across workloads behind the scenes.

🧠 What Is Manta?

Manta is MegaNova AI’s next-generation model lineup, engineered for roleplay, simulation, and creative assistant use cases. Each Manta model provides a balance of speed, reasoning power, and contextual awareness, built to integrate seamlessly into your existing workflows through the Chat Completions API.

At launch, Manta ships in three tuned tiers, each optimized for a specific layer of interaction:

| Model | Context Window | Max Output | Ideal Use Case | Pricing* |
|---|---|---|---|---|
| manta-mini-1.0 | 8K tokens | 4K tokens | Chatbots, summarizers, intent detection | Free (with $1 deposit) |
| manta-flash-1.0 | 16K tokens | 8K tokens | Roleplay, knowledge assistants, multi-turn threads | $0.02 / $0.16 per 1M tokens |
| manta-pro-1.0 | 32K tokens | 16K tokens | Deep reasoning, long-form storytelling, RAG pipelines | $0.06 / $0.50 per 1M tokens |

*Billing is per 1M tokens (input + output).
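To make the pricing table concrete, here is a rough per-request cost estimate. It is a sketch under one stated assumption: that the two prices in each row are the input rate and the output rate per 1M tokens, in that order, which the table does not spell out. `PRICES` and `estimate_cost` are illustrative names, not part of any MegaNova SDK, and Mini is left out because it is listed as free.

```python
# Illustrative only: prices copied from the table above and read as
# (input, output) USD per 1M tokens. That ordering is an assumption.
PRICES = {
    "manta-flash-1.0": (0.02, 0.16),
    "manta-pro-1.0": (0.06, 0.50),
}

TOKENS_PER_PRICE_UNIT = 1_000_000


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request."""
    input_rate, output_rate = PRICES[model]
    total = input_tokens * input_rate + output_tokens * output_rate
    return total / TOKENS_PER_PRICE_UNIT


# Example: a roleplay turn with a 6,000-token prompt and a 500-token reply.
for name in PRICES:
    print(f"{name}: ${estimate_cost(name, 6_000, 500):.6f}")
```

Under that assumption, the same turn costs about three times as much on Pro as on Flash, which is the kind of gap the tiering is meant to let you manage.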

⚙️ Inside Manta’s System Design

Under the hood, Manta implements a composite routing system — a modular orchestration layer inspired by recent advances in LLM system optimization. Unlike cascade-based systems that retry failed generations, Manta’s router makes intelligent, pre-generation decisions.

Routing Strategy Foundation

- Request-Model Matching: Each request is paired with the most cost-effective model that meets its quality threshold.

- Multi-Dimensional Analysis: The router evaluates computational complexity, content safety, and resource availability simultaneously.

- Proactive Health Management: Real-time failover ensures consistent uptime without user-visible delays.

- Context-Aware Scaling: Automatically assigns models based on the conversation’s token footprint, minimizing over-provisioning.

This approach delivers a balance between cost predictability and performance consistency — perfect for developers scaling multi-user roleplay or simulation environments.
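The context-aware part of this strategy can be illustrated from the client side. The sketch below is not Manta's actual router, whose internals are not described here; it simply picks the smallest tier whose limits fit a request before anything is generated. It assumes that "8K" means 8 × 1024 tokens and that the context window must hold the prompt and the output together. `Tier`, `TIERS`, and `pick_tier` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    context_window: int  # assumed to cover prompt + output tokens
    max_output: int


# Ordered from cheapest to most capable. Sizes assume "8K" = 8 * 1024.
TIERS = [
    Tier("manta-mini-1.0", 8 * 1024, 4 * 1024),
    Tier("manta-flash-1.0", 16 * 1024, 8 * 1024),
    Tier("manta-pro-1.0", 32 * 1024, 16 * 1024),
]


def pick_tier(prompt_tokens: int, output_tokens: int) -> Optional[Tier]:
    """Return the first (cheapest) tier that fits, or None if none does."""
    for tier in TIERS:
        fits_output = output_tokens <= tier.max_output
        fits_context = prompt_tokens + output_tokens <= tier.context_window
        if fits_output and fits_context:
            return tier
    return None


# A short chat turn fits Mini; a long thread needs a larger tier.
print(pick_tier(2_000, 500))
print(pick_tier(20_000, 2_000))
```

A real router would also weigh quality thresholds, safety, and backend health, as the list above describes; this sketch covers only the token-footprint dimension.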

Multi-Tier Architecture Strategy

Manta follows a three-tier architecture: Mini → Flash → Pro

Based on research, 80% of real-world requests can be served by smaller models without quality loss, while the remaining 20% benefit from higher-capacity reasoning models.

- Manta-Mini: For high-frequency, low-complexity interactions like chat, summaries, or intent detection.

- Manta-Flash (highly recommended): The balanced tier, combining reasoning power, context length, and cost efficiency. A good fit for roleplay, story continuation, and general AI companions.

- Manta-Pro: The premium reasoning tier for long-form generation, RAG systems, and enterprise-grade complexity.

This structure keeps Manta flexible: developers can scale model usage by task complexity instead of running one heavy model for every query.

For more detail on the system workflow, see our GitHub.

🧩 Why It Matters

Integrating Manta is as simple as switching one parameter in the Chat Completions API.

- Mini keeps latency low and costs minimal.

- Flash serves as the balanced workhorse for most production workloads.

- Pro unlocks deep reasoning and long-context continuity when your app demands more.

Each tier includes context window guarantees (8K / 16K / 32K), so teams can plan confidently for prompt length and token budgeting.

With unified telemetry and routing logic under the hood, Manta lets you focus on building AI experiences, not managing infrastructure.
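The per-tier context windows mentioned above make it possible to budget tokens before sending a long roleplay thread. Here is a minimal sketch of one common approach: dropping the oldest turns until the prompt fits. It assumes a rough heuristic of about 4 characters per token (not Manta's real tokenizer) and that the context window covers both prompt and reserved output. `estimate_tokens` and `trim_history` are hypothetical helpers.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages, context_window, reserved_output):
    """Drop the oldest non-system messages until the prompt fits the budget.

    System messages are always kept and placed first in the result.
    """
    budget = context_window - reserved_output
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    def total(msgs):
        return sum(estimate_tokens(m["content"]) for m in msgs)

    while turns and total(system + turns) > budget:
        turns.pop(0)  # discard the oldest turn first
    return system + turns


history = [
    {"role": "system", "content": "You are a tavern keeper in a fantasy town."},
    {"role": "user", "content": "Tell me about the old mine. " * 50},
    {"role": "assistant", "content": "The mine closed years ago. " * 50},
    {"role": "user", "content": "Who owns it now?"},
]
trimmed = trim_history(history, context_window=8 * 1024, reserved_output=1024)
print(len(trimmed), "messages kept")
```

For production use, swapping the character heuristic for a real tokenizer count would make the budget far more reliable.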

💡 Example Use Cases

- Game & Roleplay Systems: Use Manta-Flash for dynamic NPC dialogue and Manta-Pro for story arcs or branching narratives.

- AI Companions: Start lightweight with Manta-Mini for basic chat, and auto-scale to Pro when deeper memory and context are required.

- Enterprise Assistants: Combine Pro-tier reasoning with Mini-tier summarization for fast, cost-efficient hybrid workloads.

🧰 Getting Started with Manta

You can integrate Manta with any OpenAI-compatible client in just a few lines of code. The example below uses the Manta-Mini model:

    import os

    import requests

    url = "https://inference.meganova.ai/v1/chat/completions"

    headers = {
        "Content-Type": "application/json",
        # Read the API key from the environment rather than hard-coding it.
        "Authorization": f"Bearer {os.environ.get('MEGANOVA_API_KEY')}",
    }

    data = {
        "messages": [
            {"role": "user", "content": "Is Montreal a thriving hub for the AI industry?"}
        ],
        "model": "meganova-ai/manta-mini-1.0",
        "max_tokens": None,  # serialized as JSON null
        "temperature": 1,
        "top_p": 0.9,
        "stream": False,
    }

    response = requests.post(url, headers=headers, json=data)
    print(response.json())

Quick Preview:

- URL:

https://inference.meganova.ai/v1/chat/completions - Model Name:

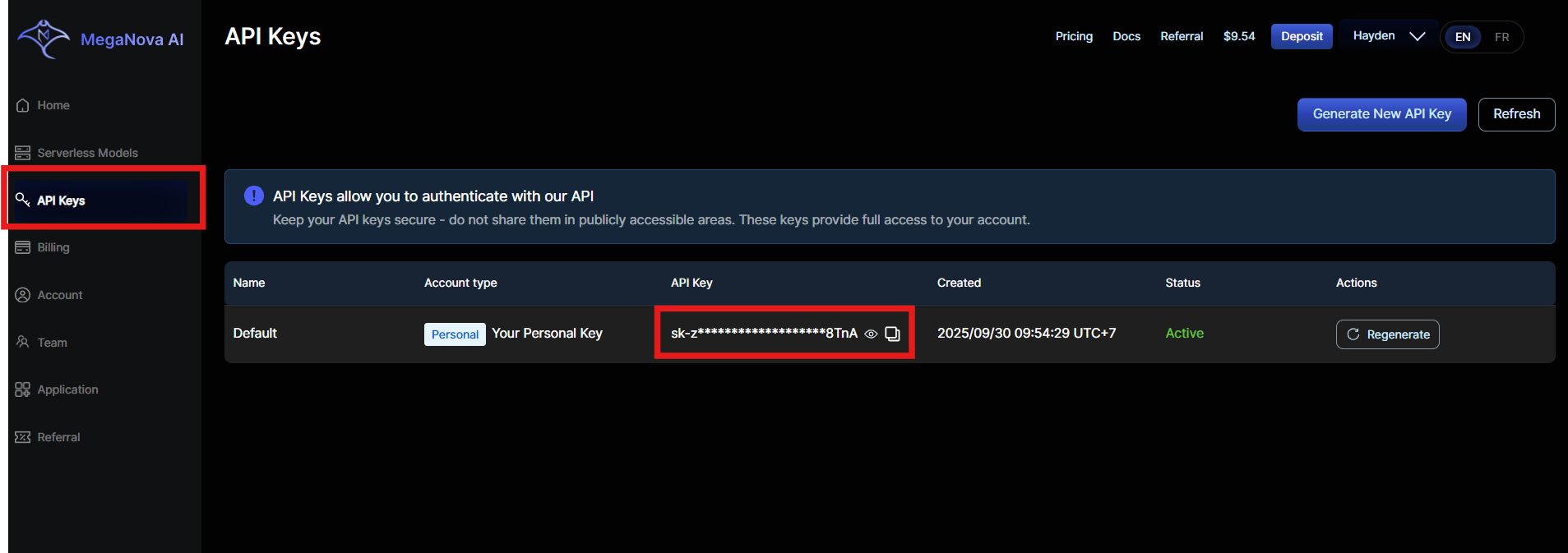

meganova-ai/manta-mini-1.0 - API Key:

That's it. You can now use this configuration to connect to roleplay platforms such as JanitorAI, SillyTavern, or KoboldAI in less than 5 minutes.

Important: Manta-Mini is free to use for all users who deposit $1 into their account. Credits will only be used once the daily free limit is exceeded.
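Since the request shape stays the same across tiers, switching models comes down to changing the `model` field. The sketch below shows this with only the Python standard library. `build_request` and `send` are hypothetical helpers, not part of a MegaNova SDK, and only the Mini model name shown above is used; check the docs for the exact identifiers of the other tiers.

```python
import json
import urllib.request

API_URL = "https://inference.meganova.ai/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for the given model."""
    payload = {
        "model": model,  # the only field that changes between tiers
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Building a request makes no network call; "demo-key" is a placeholder.
req = build_request("meganova-ai/manta-mini-1.0", "Hello there!", "demo-key")
print(req.get_method(), req.full_url)
```

Passing the result to `send(...)` with a real key from your environment performs the actual call; keeping construction and sending separate also makes the payload easy to inspect or log.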

🔭 The Bigger Vision

Manta represents a step toward modular, transparent AI orchestration — where teams can focus on narrative design, user experience, and emotional depth, while the infrastructure handles routing, scaling, and optimization.

By aligning tiered performance with predictable economics, Manta lets developers build scalable, emotionally intelligent roleplay systems without vendor lock-in or unpredictable latency.

Whether you’re crafting AI companions, world simulators, or creative copilots — Manta is your foundation for intelligent, flexible storytelling.

You can explore and test the models for free via the MegaNova AI API.

What’s Next?

Sign up and explore now.

🔍 Learn more: Visit our blog and documents for more insights.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: meganova.ai

📖 Docs: docs.meganova.ai

✍️ Blog: Read our Blog

🐦 Twitter: @meganovaai

🎮 Discord: Join our Discord