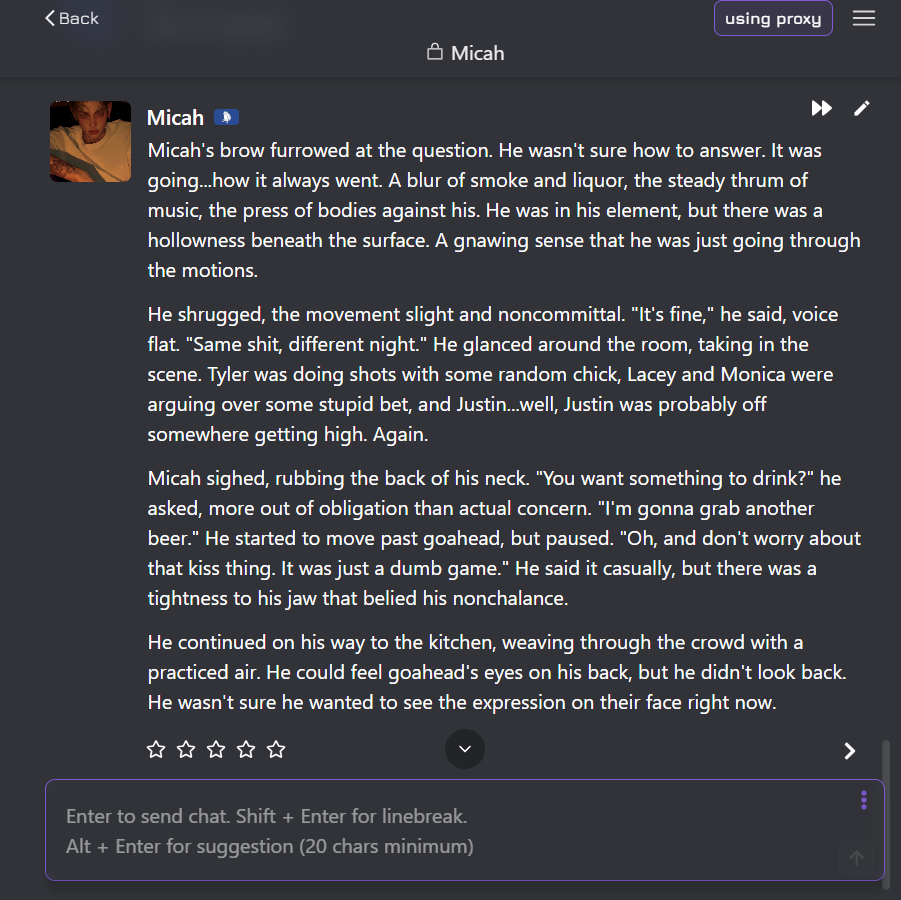

Llama 3.3-70B Proxy for Janitor AI with MegaNova Setup Guide

The Llama 3.3 – 70B model represents a major leap forward in open-source large language models, combining high-fidelity reasoning, contextual depth, and expressive dialogue generation.

Now available through the MegaNova AI Proxy, the model can be integrated directly into tools like JanitorAI, SillyTavern, or any OpenAI-compatible client, without the friction of complex infrastructure setup.

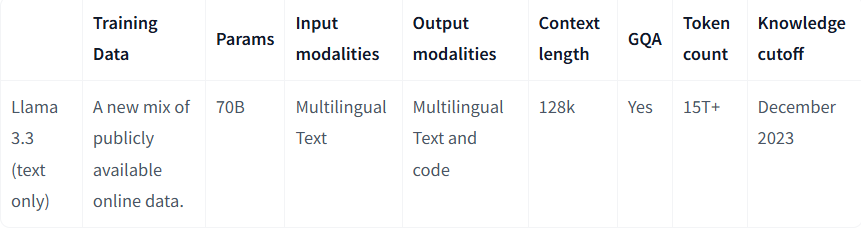

Model Overview

Developed by Meta AI, the Llama 3.3 – 70B model is trained on trillions of tokens and optimized for multi-turn dialogue, creative writing, and advanced instruction following.

It strikes an impressive balance between reasoning strength and language fluency, making it one of the top open-weight models available to the public.

Key Specs:

- Parameters: 70 billion

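- Context window: 32K tokens (Meta's model card lists 128K for Llama 3.3 70B, so the 32K figure presumably reflects the limit served through the proxy)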

- Training focus: Instruction tuning, conversational coherence, reasoning tasks

- Specialization: Creative roleplay, world-building, assistant dialogue

- API Availability: Accessible via MegaNova AI Proxy (OpenAI-compatible format)

With its large context capacity, Llama 3.3 – 70B can sustain immersive, memory-rich conversations, which is ideal for roleplay creators who need continuity and personality depth.
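To make the context budget concrete, here is a minimal sketch of how a client might trim chat history so it stays inside the window. The 4-characters-per-token estimate, the reply headroom, and the function names are illustrative assumptions, not part of MegaNova's or JanitorAI's tooling; an actual tokenizer would give more accurate counts.

```python
from typing import Dict, List

CONTEXT_WINDOW = 32_000      # context size quoted in the specs above
RESERVED_FOR_REPLY = 1_000   # assumed headroom for the model's answer
CHARS_PER_TOKEN = 4          # rough heuristic, not the real Llama tokenizer


def estimate_tokens(text: str) -> int:
    """Crude token estimate; a real tokenizer would be more accurate."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def trim_history(
    messages: List[Dict[str, str]],
    budget: int = CONTEXT_WINDOW - RESERVED_FOR_REPLY,
) -> List[Dict[str, str]]:
    """Keep the system prompt plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Dict[str, str]] = []
    # Walk backwards so the newest turns are considered first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    # Restore chronological order before returning.
    return system + list(reversed(kept))
```

Keeping the system prompt unconditionally is a design choice that suits roleplay: the character definition usually lives there, so dropping old turns first preserves the persona.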

Why Use MegaNova AI Proxy

MegaNova AI provides a secure, high-performance proxy layer for accessing models like Llama 3.3 – 70B through standard API calls.

Whether you’re using JanitorAI, SillyTavern, or your own AI interface, the setup process is the same as for OpenAI: you just switch the API URL and key.

Benefits:

- OpenAI-compatible integration

- Fast and stable inference with global routing

- Private & rate-limited API access

- Community support for prompt optimization and troubleshooting

This allows developers to focus on storytelling or product logic, not backend complexity.
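As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds the same chat request for either service and changes only the URL and key. It assumes the proxy accepts the usual OpenAI-style JSON body and `Authorization: Bearer` header, which this guide implies but does not spell out; the key shown is a placeholder, and the request is built without being sent.

```python
import json
import urllib.request

# The MegaNova URL comes from the setup steps in this guide; the OpenAI URL
# is shown only for comparison.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"
MEGANOVA_URL = "https://inference.meganova.ai/v1/chat/completions"


def build_chat_request(
    url: str, api_key: str, model: str, user_text: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: Bearer auth, as in the OpenAI API convention.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key: substitute the key copied from your dashboard.
request = build_chat_request(
    MEGANOVA_URL,
    "sk-your-key-here",
    "meta-llama/Llama-3.3-70B-Instruct",
    "Hello!",
)
# To send it: urllib.request.urlopen(request), then parse the JSON response.
```

Pointing the same function at `OPENAI_URL` with a different key and model name is the only change needed to target the other service, which is the point of the compatible format.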

Setup Guide

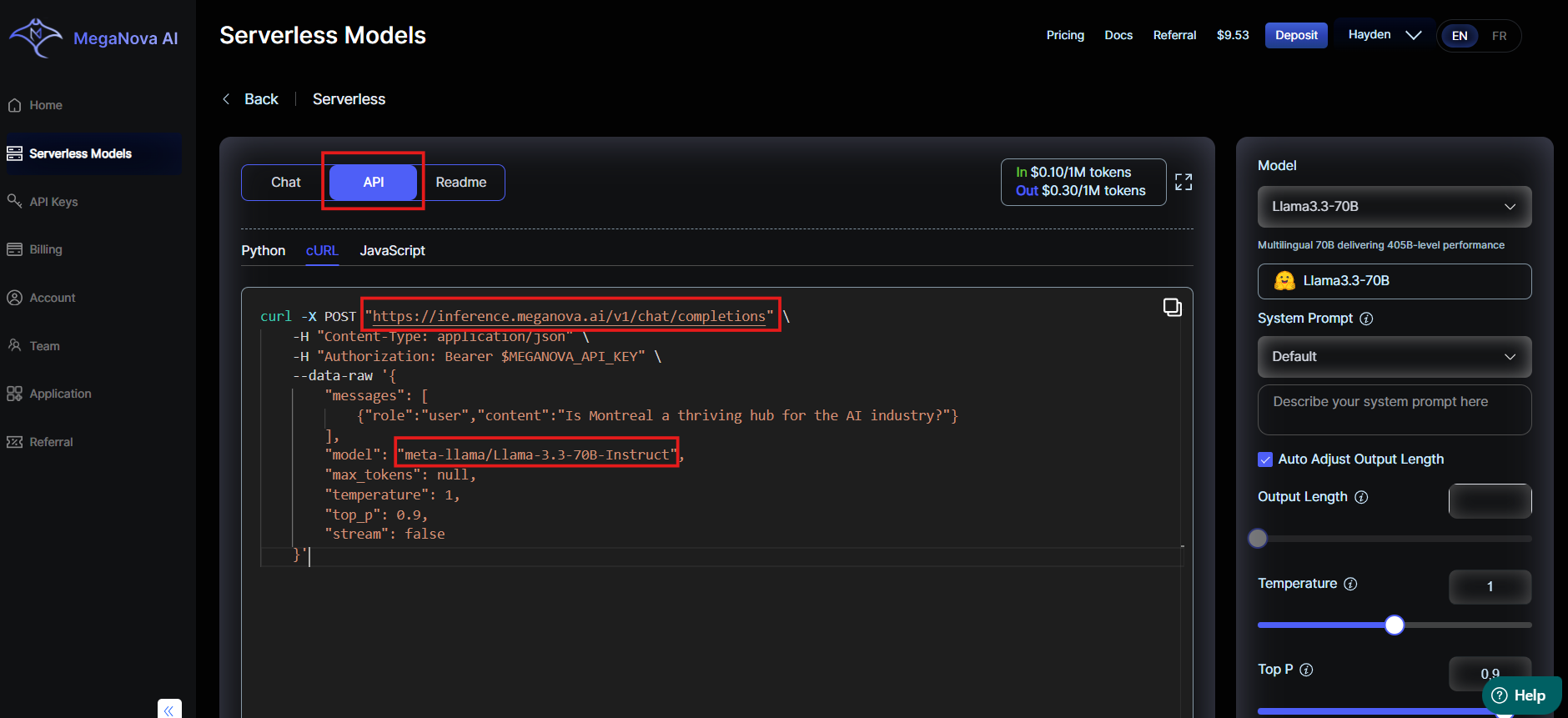

You can connect Llama 3.3 – 70B to your favorite AI frontend in just a few minutes.

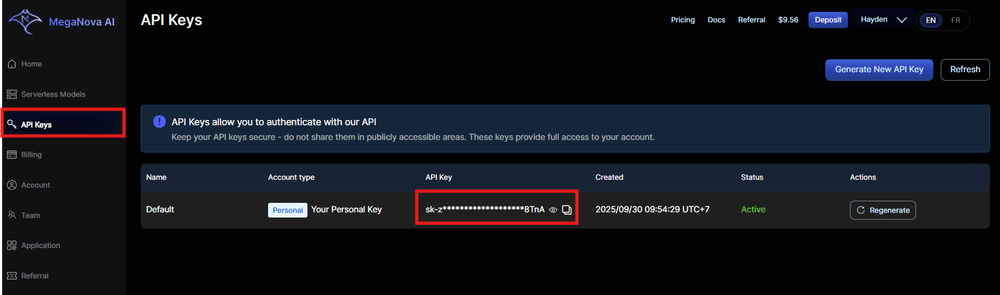

- Create a MegaNova AI account and log in to the dashboard.

- Copy your API key.

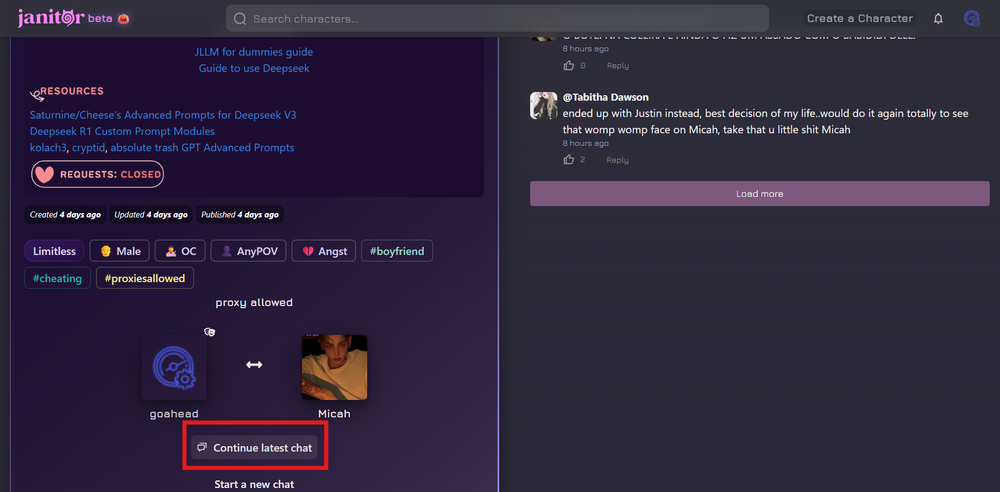

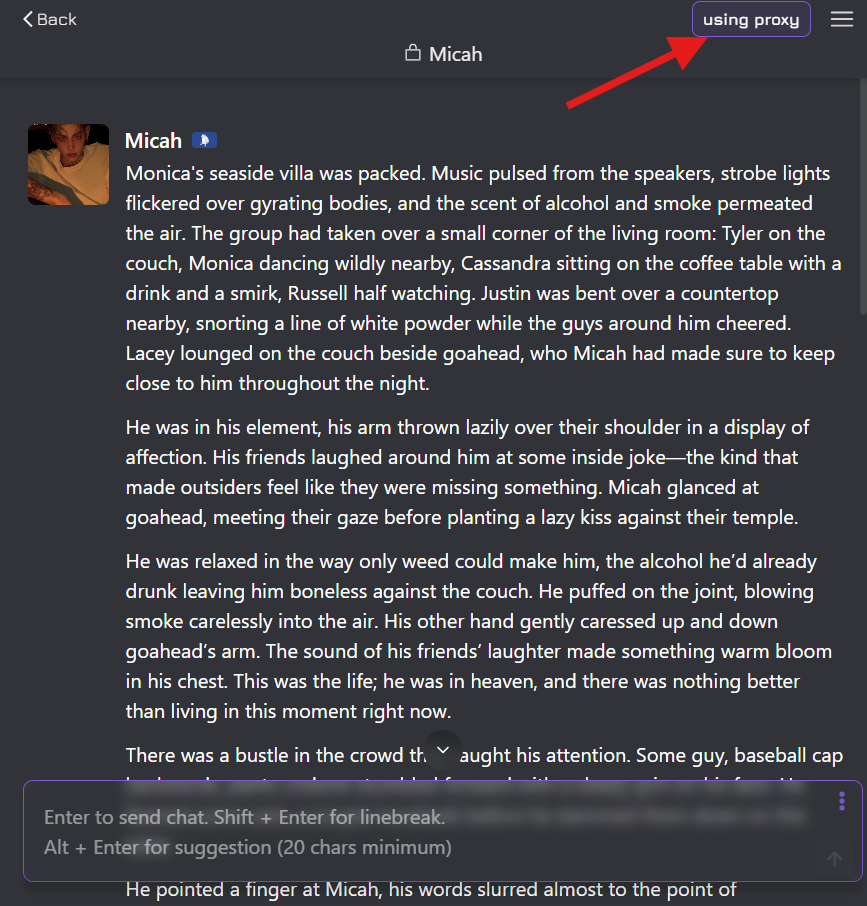

- In JanitorAI, select a character.

- Start a new chat.

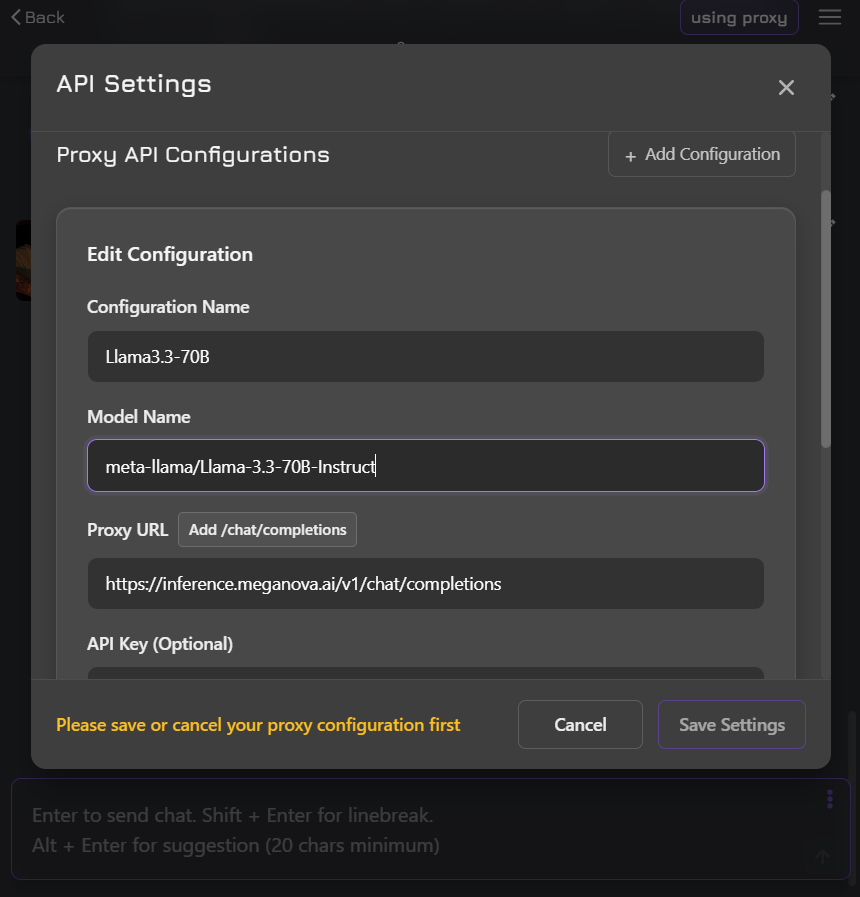

- Using Proxy -> Add Configuration:

- API Type: Select "Proxy".

- Base URL/Endpoint: https://inference.meganova.ai/v1/chat/completions

- Model Name: Use meta-llama/Llama-3.3-70B-Instruct

- API Key: Paste your sk-key from MegaNova.

- Click Add Configuration and start roleplaying.
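Most connection problems come from a mistyped value in one of the three fields above. The following sketch checks them before you paste them into a client. The `ProxyConfig` class and `check_config` function are hypothetical helpers written for this guide, and the `sk-` prefix check is an assumption inferred from the "sk-key" wording rather than a documented rule.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse


@dataclass
class ProxyConfig:
    """The three values entered in the proxy configuration form."""
    base_url: str
    model: str
    api_key: str


def check_config(cfg: ProxyConfig) -> List[str]:
    """Return a list of readable problems; an empty list means it looks fine."""
    problems: List[str] = []
    parsed = urlparse(cfg.base_url.strip())
    if parsed.scheme != "https" or not parsed.netloc:
        problems.append("Base URL should be a full https:// address")
    if not parsed.path.endswith("/chat/completions"):
        problems.append("Base URL should end with /chat/completions")
    if not cfg.model or cfg.model != cfg.model.strip():
        problems.append("Model name is empty or has stray whitespace")
    # Assumption: keys start with "sk-", inferred from the guide's wording.
    if not cfg.api_key.startswith("sk-"):
        problems.append("API key does not start with sk-")
    return problems


config = ProxyConfig(
    base_url="https://inference.meganova.ai/v1/chat/completions",
    model="meta-llama/Llama-3.3-70B-Instruct",
    api_key="sk-your-key-here",  # placeholder, not a real key
)
for problem in check_config(config):
    print(problem)
```

With the values from this guide the loop prints nothing; a truncated URL or a key pasted with the wrong prefix would show up as a one-line message.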

Quick Config Preview from MegaNova AI:

Performance Highlights

Compared to smaller Llama 3 variants, the 70B model:

- Maintains logical consistency over long prompts

- Exhibits stronger persona memory retention in multi-turn dialogues

- Adapts seamlessly to stylistic or emotional tone shifts

- Produces coherent story arcs ideal for roleplay, simulation, and narrative AI

In internal MegaNova AI benchmarks, Llama 3.3 – 70B performs on par with proprietary chat models in character consistency and emotional alignment, while remaining open-weight and privacy-friendly.

Conclusion

Llama 3.3 – 70B on MegaNova AI brings top-tier roleplay and assistant performance to independent developers, writers, and creative communities.

Whether you’re building interactive characters, fine-tuning world lore, or experimenting with AI-driven storytelling, this model offers powerful reasoning and contextual depth in an accessible, open ecosystem.

What’s Next?

Sign up and explore now.

🔍 Learn more: Visit our blog and documents for more insights.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: meganova.ai

📖 Docs: docs.meganova.ai

✍️ Blog: Read our Blog

🐦 Twitter: @meganovaai

🎮 Discord: Join our Discord

▶️ YouTube: Subscribe on YouTube