GPT-4o-mini Proxy for Janitor AI: MegaNova Setup Guide

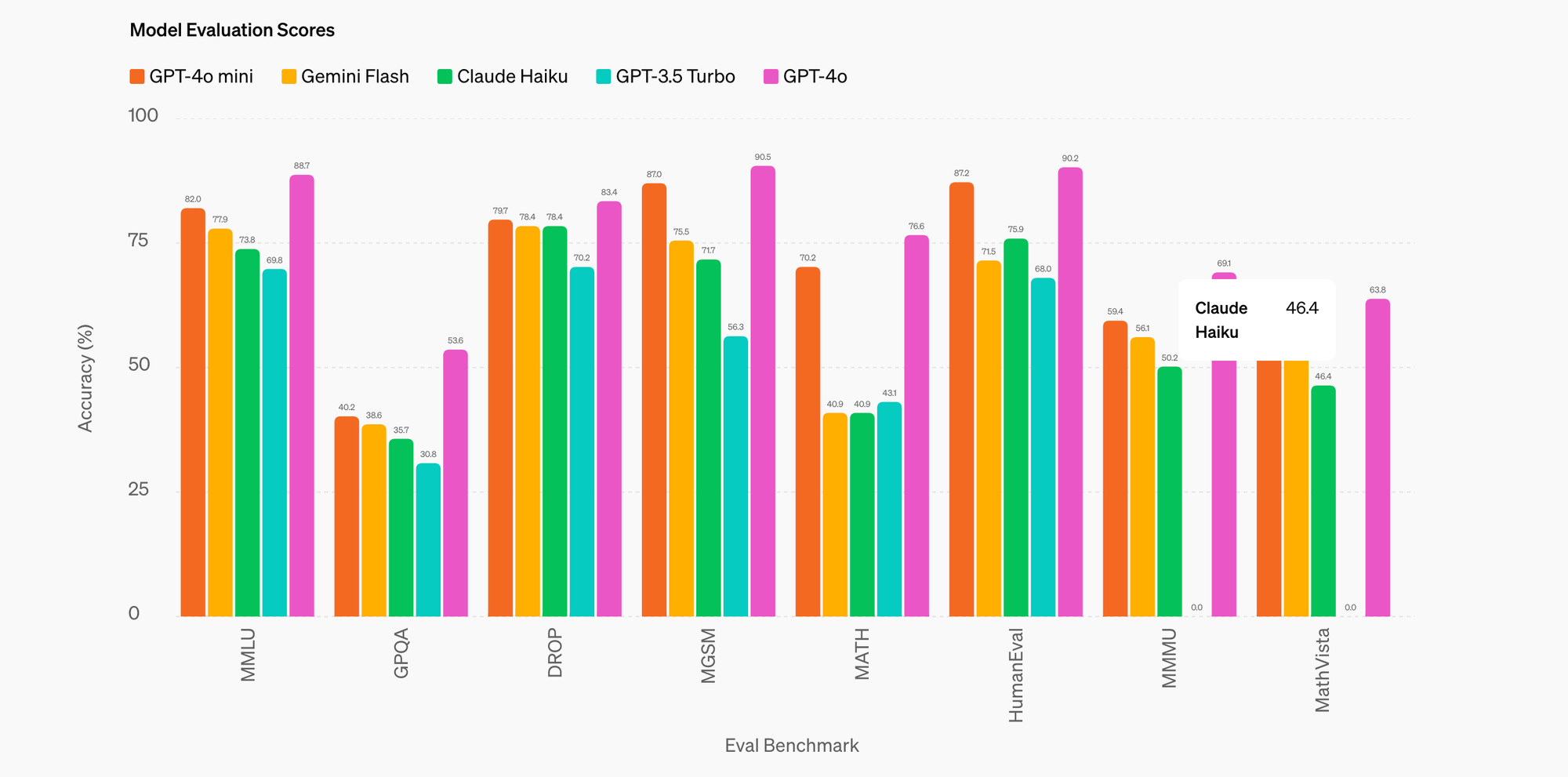

As AI models continue to evolve, efficiency has become as crucial as power. GPT-4o-mini strikes that balance, delivering OpenAI’s multimodal intelligence in a lightweight model that is faster, cheaper, and optimized for real-time inference.

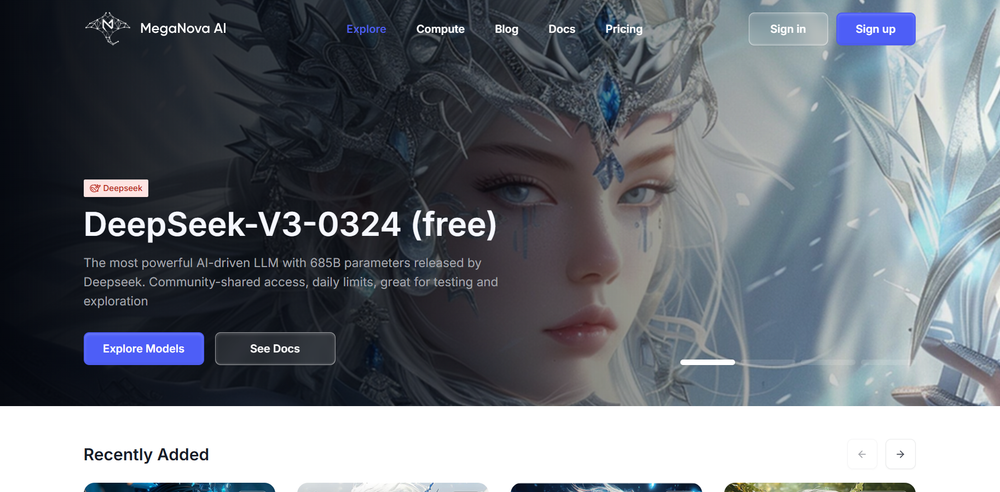

Now available through MegaNova AI Proxy, GPT-4o-mini opens new possibilities for developers who want GPT-4-class reasoning without the heavy cost or latency.

What is GPT-4o-mini?

GPT-4o-mini is a compact variant of GPT-4o, designed for both text and vision tasks. It inherits multimodal capability from GPT-4o, meaning it can handle not just text, but also images, diagrams, and mixed content.

However, it’s tuned for low-latency and high-throughput environments, making it ideal for:

- Fast conversational AI systems

- Chatbots and roleplay agents

- Coding assistants and reasoning tasks

- Real-time content moderation or summarization

In short: GPT-4o-mini brings GPT-4-level intelligence into an affordable and responsive package.

Setup via MegaNova AI Proxy

Getting started is simple. You can access GPT-4o-mini directly through MegaNova’s proxy endpoint, which lets you run inference with a MegaNova API key instead of your own OpenAI API key.

Setup Guide:

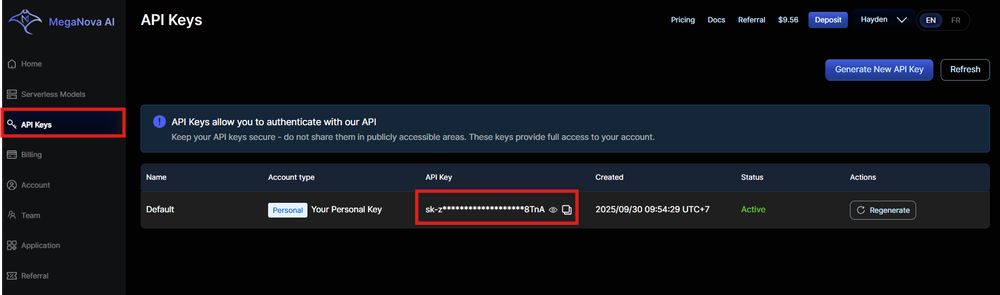

- Create a MegaNova AI account and log in to the dashboard.

- Copy your API key.

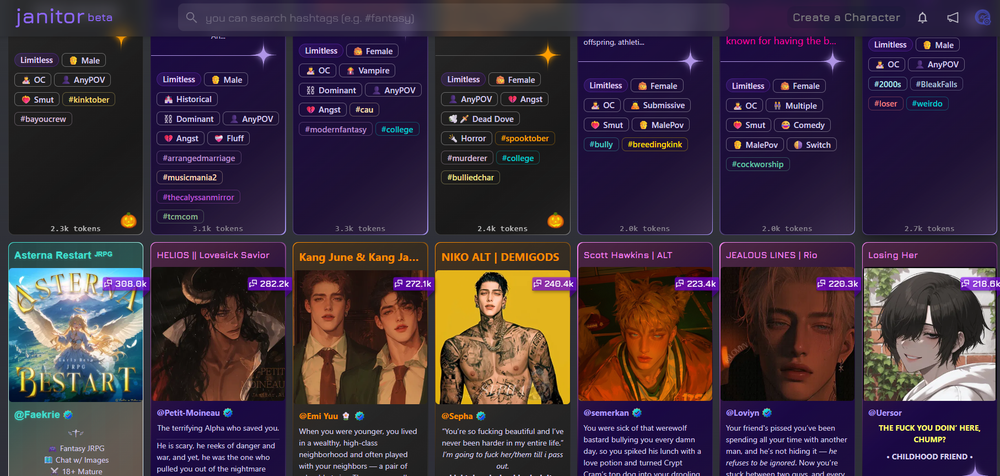

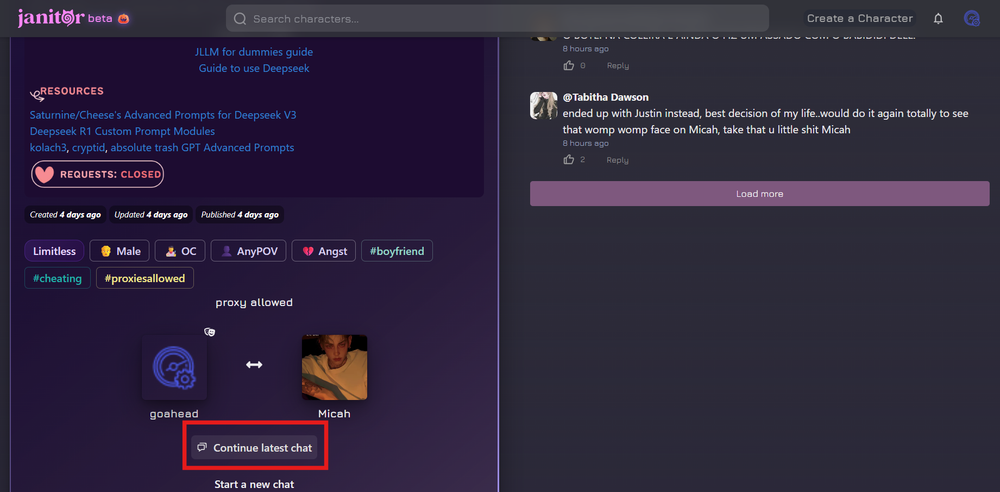

- In Janitor AI, select a character.

- Start a new chat.

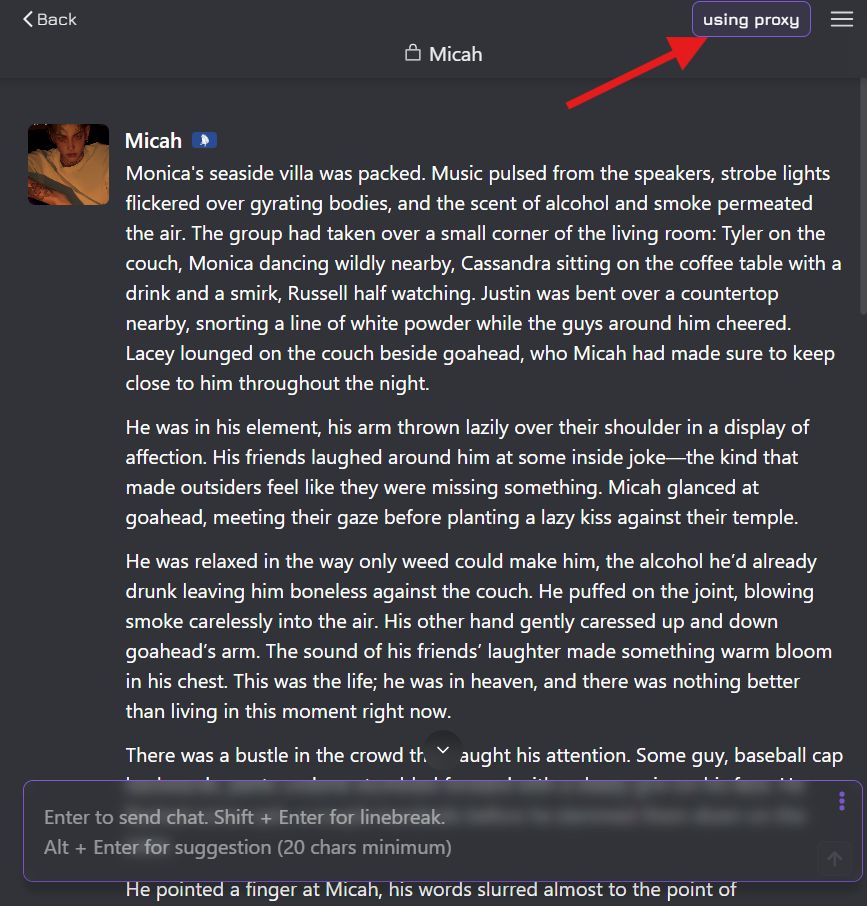

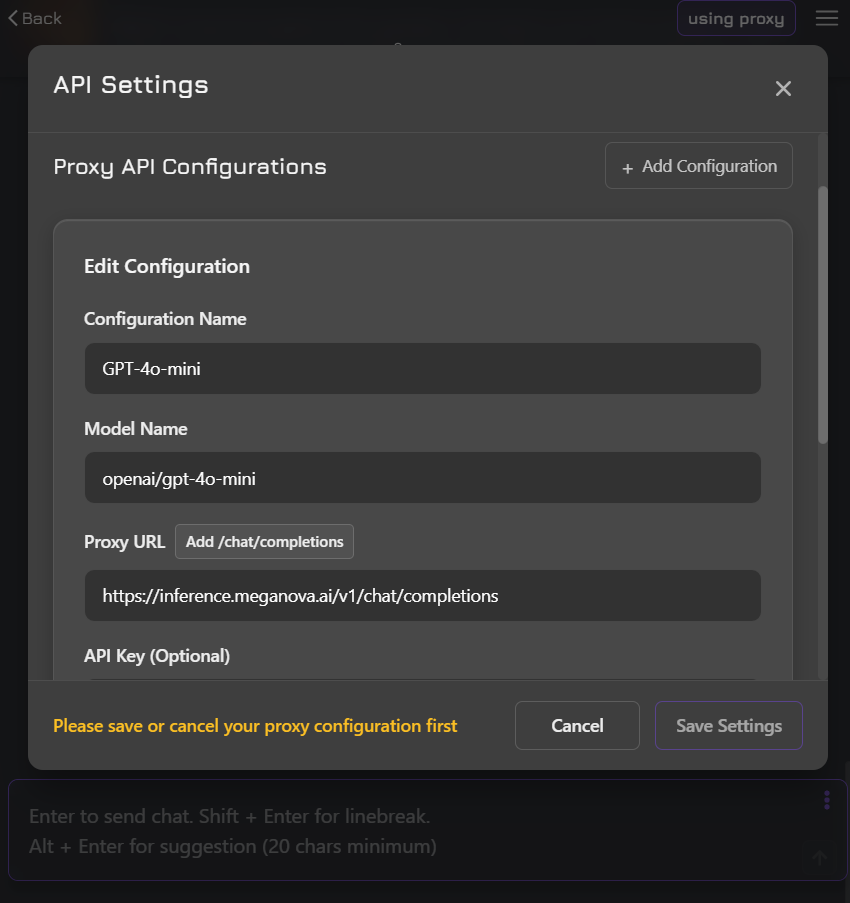

- Using Proxy -> Add Configuration:

- API Type: Select "Proxy".

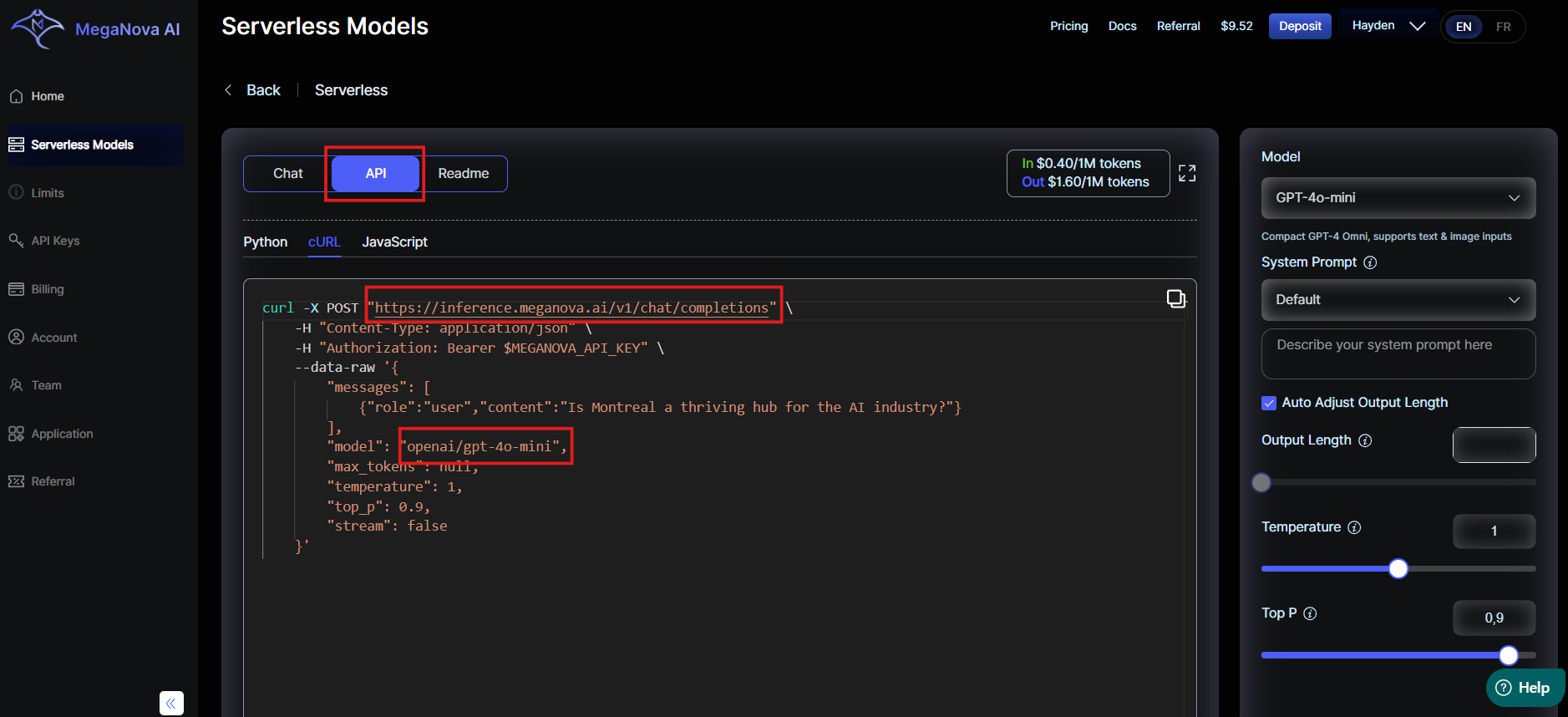

- Base URL/Endpoint: https://inference.meganova.ai/v1/chat/completions

- Model Name: Use openai/gpt-4o-mini

- API Key: Paste the sk- key you copied from MegaNova.

- Click Add Configuration, then start roleplaying.
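The same three settings (Base URL, Model Name, API Key) can also be used from your own code. The sketch below is a minimal example that assumes the MegaNova endpoint follows the OpenAI chat completions schema (a JSON body with model and messages) and accepts the key as a Bearer token in the Authorization header; this guide does not state either detail, so confirm both at docs.meganova.ai. The function names and the MEGANOVA_API_KEY environment variable are hypothetical.

```python
import json
import os
import urllib.request

# Values taken from the Janitor AI proxy configuration in this guide.
MEGANOVA_URL = "https://inference.meganova.ai/v1/chat/completions"
MODEL_NAME = "openai/gpt-4o-mini"


def build_chat_request(api_key: str, user_message: str,
                       system_prompt: str = "You are a helpful roleplay partner.") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    Assumption: the MegaNova endpoint accepts the OpenAI request schema
    and a Bearer token in the Authorization header.
    """
    payload = {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        MEGANOVA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only sends a real request if the (hypothetical) environment variable is set.
    key = os.environ.get("MEGANOVA_API_KEY")
    if key:
        req = build_chat_request(key, "Hello! Introduce yourself in one sentence.")
        with urllib.request.urlopen(req, timeout=30) as resp:
            print(extract_reply(resp.read()))
```

Keeping request construction separate from sending makes it easy to inspect the exact body and headers before pointing them at the live endpoint.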

Quick Config Preview from MegaNova AI:

Pricing (via MegaNova)

- Input: $0.40 / 1M tokens

- Output: $1.60 / 1M tokens
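To turn these per-million-token rates into a budget, multiply each token count by its rate and divide by one million. The sketch below hard-codes the prices listed above (they may change, so check MegaNova before relying on them); the workload in the usage example is purely illustrative. In chat and roleplay, each request typically resends the conversation history, so input tokens usually dominate.

```python
# Per-million-token prices (USD) as listed in this guide; these may change.
INPUT_PRICE_PER_M = 0.40
OUTPUT_PRICE_PER_M = 1.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload at the listed rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    # Illustrative workload: 1,000 chat turns, ~1,500 prompt tokens and ~300 reply tokens each.
    turns = 1_000
    cost = estimate_cost(turns * 1_500, turns * 300)
    print(f"Estimated cost: ${cost:.2f}")  # prints "Estimated cost: $1.08"
```

Here 1.5M input tokens cost $0.60 and 0.3M output tokens cost $0.48, for $1.08 in total.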

Compared with the full GPT-4o, this pricing makes GPT-4o-mini one of the most cost-efficient multimodal models available today, well suited to applications that need GPT-4-level quality without enterprise-scale cost.

Why Use GPT-4o-mini on MegaNova?

- Cost-efficient: you pay only for what you use, and GPT-4o-mini is one of the most affordable GPT-4-class models available.

- Speed-optimized: MegaNova’s proxy handles batching and caching for faster responses.

- Privacy-first: requests stay within the MegaNova proxy layer, without being logged by third-party APIs.

- Multimodal: supports text, code, and image-based prompts in a single pipeline.

Whether you’re building a real-time AI assistant, an interactive learning app, or an internal productivity tool, GPT-4o-mini offers the flexibility you need.

Example Use Case

Imagine deploying GPT-4o-mini as an AI tutor in a web app. The model can:

- Interpret diagrams or uploaded screenshots,

- Generate contextual answers based on visual + textual cues,

- Summarize lesson material or code snippets instantly.
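The tutor scenario depends on sending an image alongside text in a single request. As a minimal sketch, assuming MegaNova forwards OpenAI’s content-part format (a text part plus an image_url part carrying a base64 data URL) to GPT-4o-mini, a request body could be assembled as below and sent to the Base URL from the setup guide with your API key. The helper names and the system prompt are hypothetical; check MegaNova’s docs for image support on the proxy before relying on it.

```python
import base64
import json


def build_vision_message(question: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> dict:
    """Build an OpenAI-style user message pairing a question with an inline image.

    Assumption: the proxy accepts OpenAI's content-part format with
    base64 data URLs for images.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


def build_tutor_payload(question: str, image_bytes: bytes,
                        mime_type: str = "image/png") -> dict:
    """Wrap the vision message in a chat completions body for a hypothetical tutor app."""
    return {
        "model": "openai/gpt-4o-mini",
        "messages": [
            {"role": "system", "content": "You are a patient tutor. Explain step by step."},
            build_vision_message(question, image_bytes, mime_type),
        ],
    }


if __name__ == "__main__":
    # A real app would read the student's upload, e.g. open("diagram.png", "rb").read();
    # placeholder bytes keep this sketch self-contained.
    placeholder_png = b"\x89PNG\r\n\x1a\n"
    body = build_tutor_payload("What does this diagram show?", placeholder_png)
    print(json.dumps(body)[:120])
```

Inlining the image as a data URL avoids hosting uploads publicly, at the cost of larger request bodies for big screenshots.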

It’s multimodal learning reimagined, at a fraction of the cost and latency of full GPT-4o.

Final Thoughts

GPT-4o-mini shows how scaling down doesn’t have to mean scaling back. It retains much of the reasoning power and the multimodal capability of its larger counterpart while bringing inference speed and cost efficiency into focus.

Through MegaNova AI Proxy, developers can easily integrate GPT-4o-mini into any system, from chat experiences to knowledge automation, with no infrastructure overhead.

Try GPT-4o-mini today on MegaNova AI and see just how fast smart AI can be.

What’s Next?

Sign up and explore now.

🔍 Learn more: Visit our blog and docs for more insights.

📬 Get in touch: Join our Discord community for help or Contact Us.

Stay Connected

💻 Website: meganova.ai

📖 Docs: docs.meganova.ai

✍️ Blog: Read our Blog

🐦 Twitter: @meganovaai

🎮 Discord: Join our Discord

▶️ YouTube: Subscribe on YouTube